Attack Kab Review

22 Sep 2025Attacking Knowledge Acquisition Bottleneck: frameworks, algorithms and applications

1. Introduction

The Knowledge Acquisition Bottleneck (KAB) represents a fundamental and pervasive challenge across artificial intelligence (AI), significantly impeding the development of truly autonomous and intelligent systems. Historically, the KAB emerged prominently in the 1970s and 80s with early expert systems, characterized by the prohibitive manual effort, reliance on human experts, and substantial cost associated with hand-encoding knowledge into formal, rule-based representations [52,59,75]. This foundational problem persists, having evolved in complexity and manifestation with successive advancements in AI paradigms [47].

In contemporary AI, a comprehensive definition of the KAB incorporates the challenge of “dynamic knowledge integration” and the critical distinction between “acquired” and “utilized” knowledge, especially evident in modern Pre-trained Language Models (PLMs). While PLMs acquire vast amounts of parametric knowledge during pre-training, a significant “knowledge acquisition-utilization gap” exists, where the mere presence of knowledge within a model’s parameters does not guarantee its effective and flexible deployment in downstream tasks [34,53]. This gap is further exacerbated by the static nature of deployed LLMs, which struggle to dynamically update and adapt to constantly evolving information, frequently leading to outdated knowledge, hallucinations, and overall unreliability in knowledge-intensive applications [5,20,27,36]. Dynamic knowledge integration, therefore, refers to an AI system’s ability to continuously learn, update, and reconcile new information with its existing knowledge base, a capability still largely nascent in current static models [67].

The pervasive nature of the KAB is evident across various AI subfields, manifesting in distinct yet interconnected ways, each defined by specific underlying assumptions, theoretical foundations, architectural choices, and domain-specific adaptations. For instance, in Knowledge Representation (KR) and Knowledge Graph (KG) construction, the KAB is marked by extensive manual effort, data scarcity, semantic heterogeneity, and scalability issues [3,23,55]. In contrast, robotics faces KABs related to interpreting sensor data, engineering explicit models for physical interactions, tractability of planning, and the need for dynamic knowledge in variable environments [64]. For Large Language Models (LLMs), new facets of the KAB include computational cost, massive data requirements, interpretability issues concerning how knowledge is internalized, catastrophic forgetting, and ineffective knowledge injection mechanisms [7,8,12,32,34,44]. This contrasts with KGs, which, despite their accuracy and traceability, grapple with scalability and manual updating [3,40]. In Reinforcement Learning (RL), the KAB centers on efficient exploration in sparse reward environments [56], whereas in educational AI, it involves accurately modeling student knowledge and translating it into interpretable, actionable feedback for educators [22,50]. Furthermore, the KAB differentiates between constructive contexts, such as building AI for beneficial applications with challenges like data scarcity and interpretability [2,19], and adversarial contexts, exemplified by model extraction attacks against proprietary AI models [15,45].

The timeliness and significance of this survey are underscored by the critical role that effective knowledge acquisition plays in advancing AI capabilities, transforming AI from narrow, data-driven systems into more robust, interpretable, and adaptable intelligent agents [19,32,70]. The proliferation of LLMs and advancements in Natural Language Processing (NLP) have paradoxically amplified the prominence and urgency of the KAB. While LLMs exhibit expansive knowledge coverage and impressive generalization capabilities [12], they introduce new facets of the KAB related to their inherent limitations, such as outdated or static knowledge, susceptibility to hallucinations, domain-specific deficits, and poor transferability across specialized tasks [20,70]. This necessitates continuous learning and adaptation to dynamic world knowledge, a task where LLMs frequently struggle, especially in later pretraining stages where new knowledge acquisition becomes increasingly difficult, as observed through metrics like “knowledge entropy” [69]. Moreover, the growing reliance on AI in sensitive domains like healthcare and scientific discovery demands interpretability, trustworthiness, and robustness, which are often hampered by the KAB [48,50].

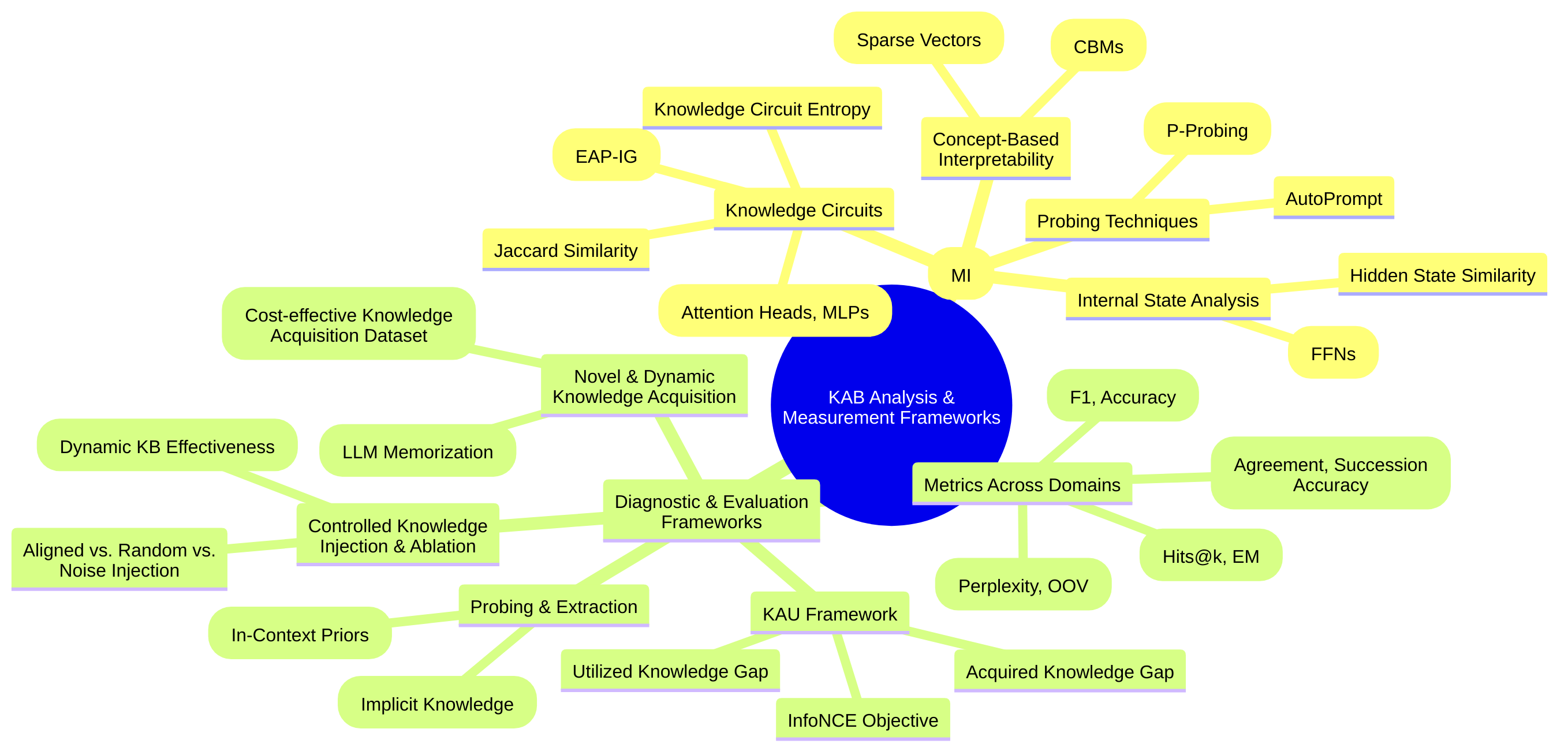

Beyond developing solutions, it is crucial to both understand and rigorously measure the KAB, a perspective explicitly highlighted in the context of assessing knowledge utilization in PLMs [53]. Diagnostic and analytical studies, such as those employing controlled datasets to study memorization in LLMs [60], or proposing novel metrics like knowledge entropy [69], are indispensable for guiding future research. The establishment of standardized benchmarks, exemplified by Bench4KE for automated competency question generation in Knowledge Engineering (KE) [14], is fundamental for systematic evaluation and comparison of methods addressing the KAB.

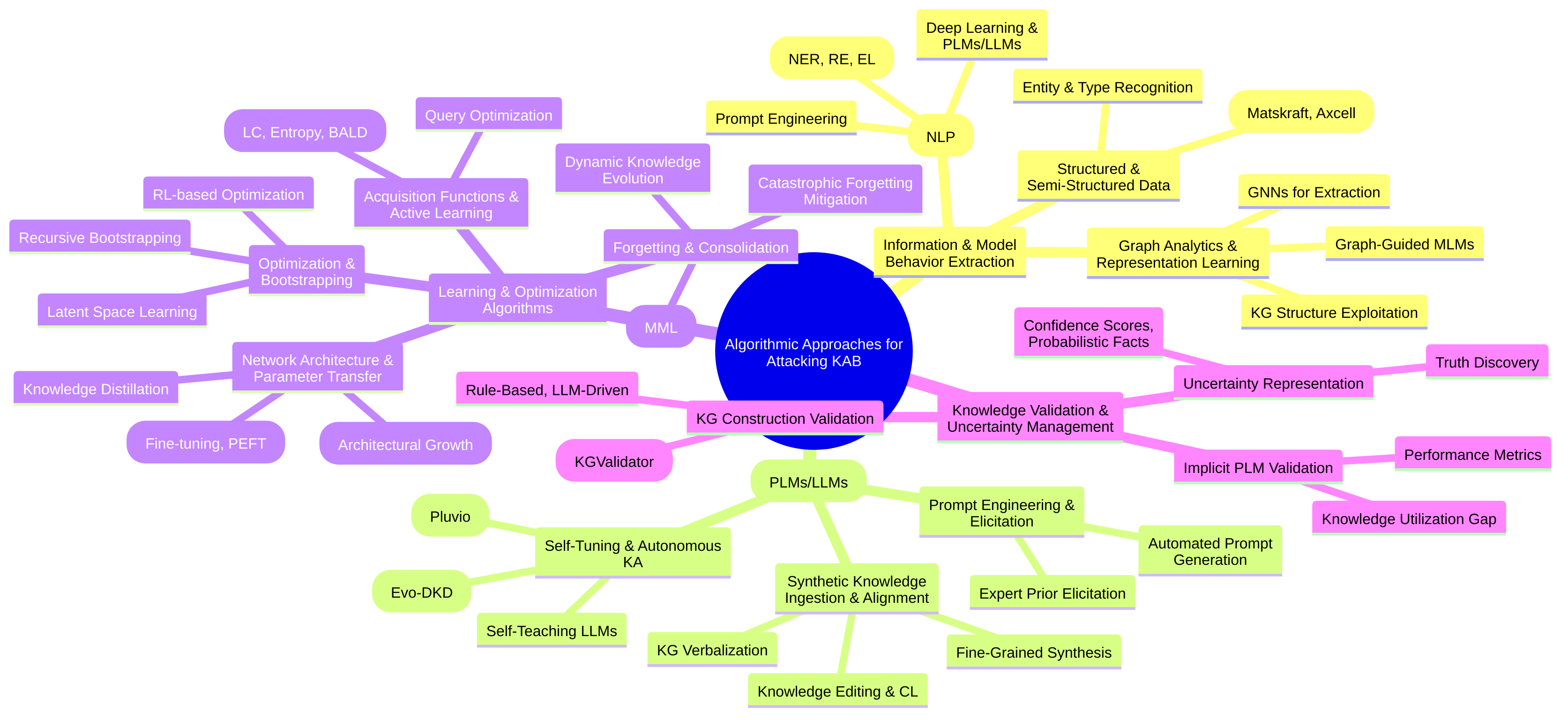

This academic survey, titled “Attacking Knowledge Acquisition Bottleneck: frameworks, algorithms and applications,” delineates the current research landscape by organizing it around several main themes: frameworks, algorithms, and applications, with a critical examination of their constructive and adversarial dimensions. The survey covers architectural designs and systematic approaches (frameworks), specific computational methods (algorithms) for diverse tasks like information extraction, skill discovery, and knowledge injection, and their applications across various domains including LLMs/NLP, robotics, game AI, and sensitive fields. It also differentiates between constructive efforts for beneficial AI and defensive strategies against adversarial knowledge acquisition, such as model extraction attacks [18,19,20,21,28,35,43,44,45,51,54,56,63,66,70].

The primary contribution of this survey is to organize the current, often fragmented, research landscape by systematically comparing, contrasting, and synthesizing insights from diverse approaches to the KAB. It explicitly identifies the underlying assumptions, theoretical foundations, architectural choices, and domain-specific adaptations that contribute to the observed strengths, weaknesses, and performance differences between methods. A recurring insight, for instance, is the convergence of neuro-symbolic AI, where combining LLMs’ expansive but implicit knowledge with KGs’ structured and interpretable representations can mitigate hallucinations and enhance reasoning [12,70]. Conversely, purely fine-tuned models can often outperform LLM-based in-context learning in specific tasks, indicating a comparative weakness in generalizability for direct application [18]. Critically, the survey also assesses the limitations and potential areas for improvement. Many current solutions, while effective in specific contexts, face challenges in generalizability, scalability, computational cost, and data dependency [17,37,43,44]. Furthermore, ethical considerations, bias mitigation, and the trade-offs between model size and knowledge integration capabilities remain largely unaddressed in many specialized studies [69]. Overcoming the KAB necessitates interdisciplinary research, moving beyond isolated solutions to foster frameworks that combine diverse knowledge representations, dynamic learning mechanisms, and enhanced human-AI collaboration. This includes developing new methodologies for data-efficient learning, enabling systems to dynamically update knowledge, bridging the gap between acquired and utilized knowledge, and improving the interpretability and explainability of AI’s internal knowledge processes. By pinpointing these limitations and unaddressed questions, this survey aims to highlight future research directions that are crucial for developing more robust, efficient, and ethical AI systems.

1.1 Defining the Knowledge Acquisition Bottleneck

The Knowledge Acquisition Bottleneck (KAB) represents a fundamental and pervasive challenge across artificial intelligence (AI), impeding the development of truly autonomous and intelligent systems. Fundamentally, the KAB describes the inherent difficulties and resource-intensive processes involved in enabling AI systems to effectively acquire, integrate, represent, maintain, and apply knowledge from diverse sources for robust and generalizable performance [47]. Historically, this bottleneck first emerged prominently in the 1970s and 80s with early expert systems, characterized by the prohibitive manual effort, reliance on human experts, and cost associated with hand-encoding knowledge into formal, rule-based representations [52,59,75]. This foundational problem persists, evolving in complexity and manifestation with successive advancements in AI paradigms.

A comprehensive definition of the KAB must incorporate the challenge of “dynamic knowledge integration” and the critical distinction between “acquired” and “utilized” knowledge, especially evident in modern Pre-trained Language Models (PLMs) [34,53]. While PLMs acquire vast amounts of parametric knowledge during pre-training, there exists a significant “knowledge acquisition-utilization gap,” where the mere presence of knowledge within a model’s parameters does not guarantee its effective and flexible deployment in downstream tasks [53,68,73]. This gap is further exacerbated by the static nature of deployed LLMs, which struggle to dynamically update and adapt to constantly evolving information, frequently leading to outdated knowledge, hallucinations, and overall unreliability in knowledge-intensive applications [5,20,27,36]. Dynamic knowledge integration, therefore, refers to an AI system’s ability to continuously learn, update, and reconcile new information with its existing knowledge base, a capability still largely nascent in current static models [67].

Comparative KAB Manifestations in Paired AI Subfields

| AI Subfield Pair | Key KAB Manifestations (AI-1) | Key KAB Manifestations (AI-2) | Core Contrast/Distinction |

|---|---|---|---|

| KR & KG vs. Robotics | Manual effort, Data scarcity, Semantic heterogeneity, Scalability, Error propagation | Sensor data interpretation, Explicit physical models, Planning tractability, Dynamic knowledge, Data scarcity, Environmental variability | Structure/content of knowledge vs. Operational acquisition/application in physical environment |

| LLMs vs. Knowledge Graphs (KGs) | Computational cost, Massive data requirements, Interpretability, Catastrophic forgetting, Ineffective knowledge injection, Hallucinations, Static nature, Acquisition-utilization gap | Manual effort for construction/update, Scalability, Limited extrapolation/reasoning transferability, Data heterogeneity, Incompleteness | Implicit/broad/general vs. Explicit/structured/precise |

| Reinforcement Learning (RL) vs. Educational AI | Efficient exploration in sparse rewards, Acquiring behavioral primitives, Costly/unsafe physical exploration | Modeling student knowledge from diverse materials, Interpretable/actionable feedback, Distinguishing knowledge gain, Hallucinations in LLM explanations | Efficient policy/behavior acquisition vs. Interpretable human learning models |

| Constructive Contexts vs. Adversarial Contexts | Manual effort, Data scarcity, Scalability, Interpretability, Building beneficial AI | Model extraction attacks, Limited query budgets, Lack of domain knowledge, Ethical/security implications (IP theft) | Building AI for benefit vs. Exploiting AI for unauthorized knowledge acquisition |

The KAB’s pervasive nature is evident across various AI subfields, manifesting in distinct yet interconnected ways, each defined by specific underlying assumptions, theoretical foundations, architectural choices, and domain-specific adaptations.

1. Knowledge Representation vs. Robotics:

In the realm of Knowledge Representation (KR) and Knowledge Graph (KG) construction, the KAB is historically rooted in the difficulty of creating and maintaining formal, structured knowledge. This involves extensive manual effort, cost, and reliance on human experts for knowledge elicitation, conceptualization, and formalization [3,31,52,55]. Modern KG construction still grapples with data scarcity of high-quality graph-structured data, error propagation in multi-stage pipelines, semantic heterogeneity across diverse sources, and scalability issues when dealing with large, dynamic information landscapes [23,38,40,55]. The “Open World problem” further highlights the KAB’s facet in the difficulty of distinguishing new from old knowledge and integrating it without catastrophic forgetting, especially when feature distributions differ significantly between known and unknown entities [37].

Conversely, in robotics, the KAB is deeply intertwined with embodied intelligence and real-world interaction [64]. It manifests through a complex interplay of: (1) a core Knowledge Bottleneck requiring human experts to manually interpret sensor data and provide explicit domain knowledge for tasks like object classification; (2) an Engineering Bottleneck stemming from the substantial time and effort required to implement and generate explicit models for robot dynamics and environmental interactions, especially for complex physical properties; (3) Tractability issues arising from the computational complexity of realistic planning problems, leading to slow response times; and (4) Precision challenges in executing plans with sufficient accuracy, particularly with flexible robots [64]. Additionally, data scarcity for real-world training, environmental variability with unpredictable noise, and the necessity for dynamic knowledge due to changing robot properties (e.g., wear, temperature) further amplify the KAB in robotics. While knowledge representation focuses on the structure and content of knowledge itself, robotics emphasizes the operational acquisition and application of knowledge within a physical, dynamic environment.

2. LLMs vs. Knowledge Graphs:

The KAB in Large Language Models (LLMs) contrasts sharply with that in Knowledge Graphs (KGs), highlighting fundamental differences in their knowledge paradigms [12]. For LLMs, KABs extend beyond the acquisition-utilization gap to include the computational cost and massive data requirements for pre-training, which pose significant challenges for deployment on resource-constrained systems [8]. A critical aspect is the “critical gap in understanding how LLMs internalize new knowledge” and structurally embed this acquired knowledge in their neural computations, affecting learning and retention dynamics, as quantified by concepts like knowledge entropy decay during pre-training [5,30,34,69]. This interpretability challenge extends to the lack of transparent reasoning, debugging capabilities, and control over LLM behavior, which affects reliability and safety [32]. Furthermore, LLMs face catastrophic forgetting during fine-tuning and struggle with effective knowledge injection from external KGs, sometimes treating relevant injected knowledge as noise due to ineffective knowledge utilization or knowledge complexity mismatch [7,42,44,61]. Eliciting knowledge from LLMs also presents a KAB due to manual effort in prompt engineering and the sensitivity to phrasing [43]. When integrating LLMs with structured knowledge like KGs (e.g., in Retrieval-Augmented Generation, RAG), an architectural mismatch arises because most RAG pipelines are designed for unstructured text, limiting their applicability to holistic graph structures and leading to a knowledge acquisition-utilization gap where LLMs lack substantial pre-training on graph data [1,63]. LLMs’ inherent struggle to maintain structured consistency and susceptibility to hallucinations also pose a KAB for LLM-driven ontology evolution [17].

In contrast, KGs are characterized by high accuracy, controlled reasoning, traceable origins, and human-centric interpretability [12]. However, KGs face their own KABs related to scalability (e.g., model size and inference time grow linearly with KG size), limited extrapolation, reasoning transferability, and the manual effort required for continuous updating and validation, particularly for emerging entities and relations [3,23,40,49]. LLMs’ strength in broad coverage and generalization, and KGs’ strength in structure and precision, illustrate a “cognitive gap” in how knowledge is stored and organized, highlighting the trade-off in the KAB for each paradigm [12].

3. Reinforcement Learning (RL) vs. Educational AI:

The KAB manifests distinctly in RL and educational AI, reflecting their differing learning objectives and environments. In RL, the KAB primarily concerns the difficulty of efficient exploration and knowledge acquisition in environments characterized by sparse reward signals [35,56]. Agents struggle to discover effective policies without clear and frequent reward feedback, or to acquire useful behavioral primitives for navigating vast state-action spaces. This often necessitates physical exploration, which can be costly or unsafe. The core problem is bridging the gap between minimal environmental feedback and the complex knowledge needed for optimal behavior.

Conversely, in educational AI, the KAB centers on the challenge of accurately modeling student knowledge from diverse learning materials and translating this into interpretable, actionable feedback for educators [22,50]. Traditional knowledge tracing methods exacerbate this KAB by relying on opaque, high-dimensional latent vectors, which provide limited cognitive interpretability or concrete guidance for teaching strategies. The difficulty arises from distinguishing knowledge gain from gradable versus non-gradable learning activities, and confounding factors like student selection bias [22]. LLM-based explanations, while promising, are susceptible to hallucination, hindering reliability as educational tools [50]. The KAB here is about transforming internal system representations of knowledge into human-understandable and pedagogically useful insights.

4. Constructive vs. Adversarial KAB:

The KAB also differentiates between constructive and adversarial contexts. Constructively, the KAB encompasses the traditional challenges of manual effort, data scarcity (e.g., for labeled data, specialized domain knowledge, or sense-annotated corpora), scalability (e.g., maintaining large knowledge bases, handling scientific information overload), and interpretability in building AI systems for beneficial applications [2,6,10,11,14,16,19,21,25,28,39,41,48,51,57,65,70,72]. This includes the challenge of translating scientific research into accessible knowledge or making ML models’ predictions scientifically insightful [2,39].

In contrast, an adversarial KAB describes the challenge faced by an adversary in efficiently acquiring the functional knowledge of a proprietary model (e.g., a Machine Learning as a Service (MLaaS) model) under practical constraints like limited query budgets and lack of domain knowledge [15,45]. This form of KAB involves ethical and security implications, including copyright violations, patent infringement, and financial and reputational damage to model owners. The underlying problem is reconstructing an accurate substitute model with minimal interaction.

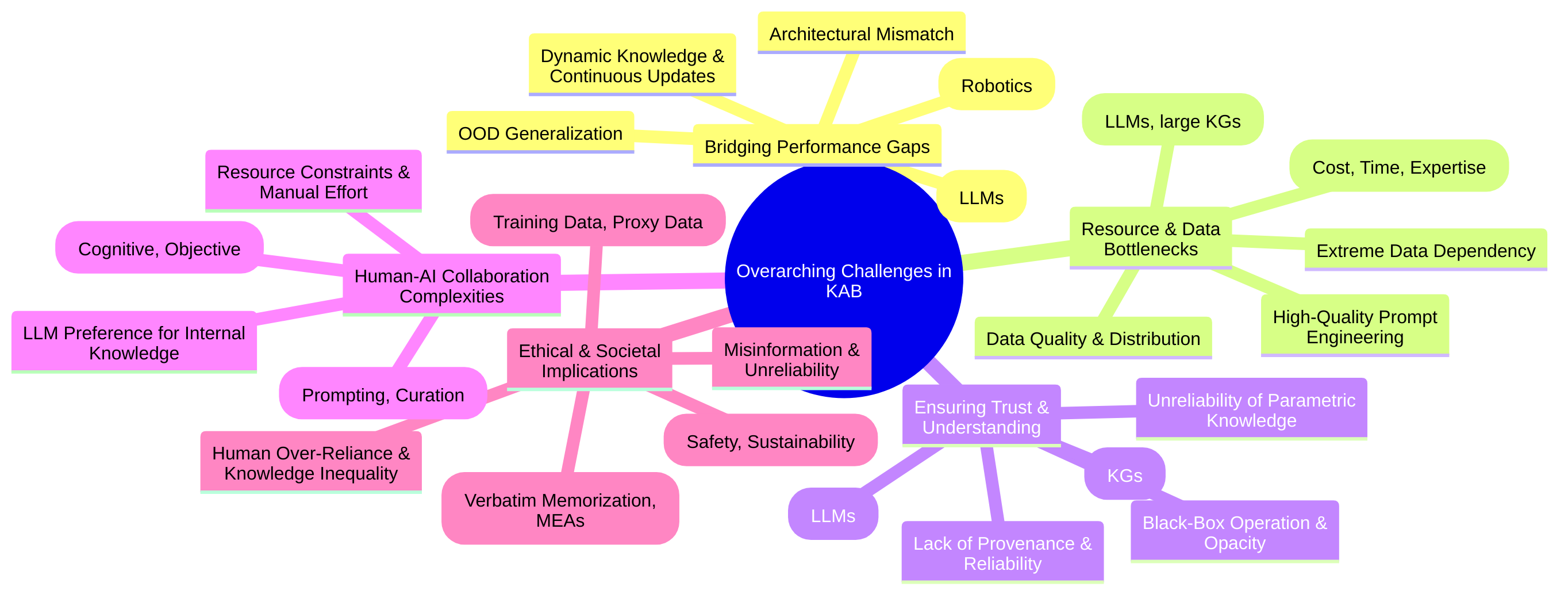

Implications for Solutions and Future AI Systems: The diverse manifestations of the KAB across domains underscore that there is no single, universal solution; rather, a spectrum of tailored and often interdisciplinary approaches is required. Addressing this bottleneck is crucial for advancing AI systems towards greater autonomy and intelligence because it directly impacts their:

- Reliability and Trustworthiness: Systems prone to hallucinations, outdated knowledge, or inability to generalize become unreliable for real-world applications in critical domains [20,32].

- Scalability and Efficiency: Manual efforts and high computational costs limit the deployment and continuous adaptation of AI models to vast, dynamic datasets and complex environments, hindering their practical utility [3,8,44,62].

- Generalization and Adaptability: AI systems must move beyond task-specific training to effectively acquire and transfer knowledge across diverse problem settings and adapt to unforeseen changes in dynamic open worlds [24,33,37,47].

- Interpretability and Actionability: Understanding how AI systems acquire and use knowledge, and translating that into human-understandable insights, is vital for high-stakes domains like healthcare, education, and scientific discovery, bridging the “human-AI knowledge gap” [2,22,32,39,50,54].

- Robustness and Security: The KAB can expose systems to vulnerabilities, as seen in adversarial model extraction, emphasizing the need for robust knowledge safeguards and secure deployment [15].

Overcoming the KAB necessitates interdisciplinary research, moving beyond isolated solutions to foster frameworks that combine diverse knowledge representations, dynamic learning mechanisms, and enhanced human-AI collaboration. This includes developing new methodologies for data-efficient learning, enabling systems to dynamically update knowledge, bridging the gap between acquired and utilized knowledge, and improving the interpretability and explainability of AI’s internal knowledge processes.

Limitations and Future Research:

Despite significant progress, several critical limitations and unaddressed questions persist within the current understanding and tackling of the KAB. Many papers define KAB implicitly within their specific domain, limiting the generalizability and comparative analysis of solutions across disparate AI fields [52,63,64,69]. A common deficiency is the lack of quantifiable metrics for KAB’s severity beyond qualitative descriptions of cost, time, or performance drops [47]. For LLMs, understanding the theoretical underpinnings of why they struggle with knowledge extraction after continued pre-training, or why knowledge entropy decays during pre-training, remains a key challenge [68,69]. The mechanisms by which LLMs “know what knowledge is outdated” and how to effectively bridge the “cognitive gap” between LLM knowledge storage and human understanding also remain largely unexplored [12,20]. Additionally, current efforts to address KABs often introduce new complexities, such as the computational cost of Monte Carlo Tree Search (MCTS)-based methods in RAG or the ineffective knowledge utilization in LLM fine-tuning when external knowledge is injected [7,27]. Future research should focus on developing standardized KAB metrics, exploring mechanistic explanations for observed KAB phenomena (especially in black-box models), and devising integrated, generalizable frameworks that address multiple facets of the KAB concurrently, thereby fostering the development of genuinely intelligent and adaptable AI systems.

1.2 Scope and Significance of the Survey

The knowledge acquisition bottleneck (KAB), traditionally understood as the difficulty in eliciting and encoding expert knowledge into AI systems, has emerged as a pervasive and increasingly urgent challenge across various domains of artificial intelligence. The timeliness and significance of this survey are underscored by the critical role that effective knowledge acquisition plays in advancing AI capabilities, transforming AI from narrow, data-driven systems into more robust, interpretable, and adaptable intelligent agents [19,27,32,50,69,70].

The evolution of AI, particularly the proliferation of Large Language Models (LLMs) and advancements in Natural Language Processing (NLP), has paradoxically amplified the prominence and urgency of the KAB. While LLMs exhibit expansive knowledge coverage and impressive generalization capabilities [12], they introduce new facets of the KAB related to their inherent limitations, such as outdated or static knowledge, susceptibility to hallucinations, domain-specific deficits, and poor transferability across specialized tasks [5,20,27,44,70]. This necessitates continuous learning and adaptation to dynamic world knowledge, a task where LLMs frequently struggle, especially in later pretraining stages where new knowledge acquisition becomes increasingly difficult, as observed through metrics like “knowledge entropy” [69]. Furthermore, the growing reliance on AI in sensitive domains like healthcare, scientific discovery, and critical infrastructure demands interpretability, trustworthiness, and robustness, which are often hampered by the KAB [19,32,48,50,58].

Beyond developing solutions, it is crucial to both understand and rigorously measure the KAB, a perspective explicitly highlighted in the context of assessing knowledge utilization in Pretrained Language Models (PLMs) [53]. Diagnostic and analytical studies, such as those employing controlled datasets like FictionalQA to study memorization in LLMs [60], or proposing novel metrics like knowledge entropy [69], are indispensable for guiding future research. The establishment of standardized benchmarks, exemplified by Bench4KE for automated competency question generation in Knowledge Engineering (KE) [14], is fundamental for systematic evaluation and comparison of methods addressing the KAB.

This academic survey delineates the scope of research into “Attacking the Knowledge Acquisition Bottleneck” by organizing it around several main themes: frameworks, algorithms, and applications, with a critical examination of their constructive and adversarial dimensions. The inclusion of these themes is justified by their pervasive relevance across the input papers:

- Frameworks: This survey covers architectural designs and systematic approaches that streamline knowledge acquisition. Examples include

StructSense, a modular framework for structured information extraction integrating symbolic knowledge and human-in-the-loop validation [70];AutoElicit, which leverages LLMs for expert prior elicitation in predictive modeling [19];Agent KB, designed for cross-domain experience sharing in agentic problem-solving [33]; and frameworks for incremental and cumulative knowledge acquisition that aim for lifelong learning [67]. The conceptualization of a “Large Knowledge Model” that merges LLMs and KGs also represents a significant framework perspective [12]. - Algorithms: The survey analyzes specific computational methods developed to tackle the KAB. These range from

AutoPrompt, an automated method for eliciting knowledge from LLMs without fine-tuning [43], toDiversity Induced Weighted Mutual Learning (DWML)for data-efficient language model pretraining on small datasets [8]. Other algorithms focus on skill discovery in reinforcement learning (e.g.,InfoBot) [56], dynamic knowledge base construction for LLM task adaptation (e.g.,KnowMap) [44], and cost-efficient expert interaction in sensitive domains (e.g.,PU-ADKA) [4]. - Applications: The scope extends to diverse application areas where the KAB is particularly acute.

- LLMs and Natural Language Processing (NLP): This forms a major focus, encompassing information extraction (e.g., Named Entity Recognition, Relation Extraction in biomedical domain [18], scholarly articles [51]), Knowledge Graph construction and population [3,11,31,49,66], Retrieval-Augmented Generation (RAG) for improving factual accuracy and reasoning [27,63,71], continual knowledge learning and updating for ever-changing world knowledge [20,68], and intrinsically interpretable LLMs [32].

- Robotics and Game AI: In robotics, the KAB manifests in efficient skill acquisition and transfer [35,56], behavioral learning in complex systems [64], and bridging subsymbolic sensor inputs with symbolic planning [28]. For game AI, it involves human-AI knowledge transfer and discovering super-human knowledge, as demonstrated in AlphaZero [54].

- Sensitive Domains: This includes clinical NLP for patient care [21], aerospace maintenance for trusted decision support [48], education for interpretable knowledge tracing [50], and materials science for accelerated discovery [65].

- Constructive and Adversarial Dimensions: This survey examines both benevolent efforts to acquire and integrate knowledge and defensive strategies against adversarial knowledge acquisition. While most papers focus on constructive knowledge acquisition, some directly address adversarial KABs related to AI security and intellectual property protection. For instance, research on model extraction attacks (MEAs) against Machine Learning as a Service (MLaaS) platforms highlights the threat of unauthorized model acquisition and the need for defense mechanisms [15,45]. The detection of vulnerable code and intellectual property infringements through assembly clone search also represents an adversarial dimension of KAB [24]. Furthermore, the challenge of building “Trustworthy LLMs” includes mitigating risks like jailbreaks and toxic content, which involves understanding and manipulating knowledge mechanisms to prevent malicious exploitation [30].

This survey’s primary contribution is to organize the current, fragmented research landscape by systematically comparing, contrasting, and synthesizing insights from diverse approaches to the KAB. It explicitly identifies the underlying assumptions, theoretical foundations, architectural choices, and domain-specific adaptations that contribute to the observed strengths, weaknesses, and performance differences between methods. For example, the convergence of neuro-symbolic AI is a recurring insight, with many works highlighting how combining LLMs’ expansive but implicit knowledge with KGs’ structured and interpretable representations can mitigate hallucinations and enhance reasoning in domains ranging from general knowledge to specialized scientific literature [12,36,66,70]. Conversely, purely fine-tuned models can often outperform LLM-based in-context learning in specific tasks, such as biomedical NER and RE, indicating a comparative weakness in generalizability for direct application [18].

Critically, the survey also assesses the limitations and potential areas for improvement or future research. Many current solutions, while effective in their specific contexts, face challenges in generalizability across domains (e.g., “quantum learning” demonstrated in pedestrian re-identification yet untested in LLMs or robotics [37]), scalability (e.g., simulating dual-decoder architectures due to GPU constraints in ontology evolution [17]), computational cost (e.g., dynamic knowledge base construction in KnowMap not fully quantified [44]), and data dependency despite efforts toward data efficiency [43,57]. Furthermore, ethical considerations, bias mitigation, and the trade-offs between model size and knowledge integration capabilities remain largely unaddressed in many specialized studies [69]. By pinpointing these limitations and unaddressed questions, the survey aims to highlight future research directions that are crucial for developing more robust, efficient, and ethical AI systems. The subsequent sections will delve into specific solutions within these themes, examining their technical details, empirical impact, and implications for a more comprehensive understanding of how to effectively attack the KAB.

2. Characterizing the Knowledge Acquisition Bottleneck

The Knowledge Acquisition Bottleneck (KAB) represents a fundamental and pervasive challenge across diverse fields of artificial intelligence, broadly defined as the inherent difficulty, cost, and inefficiency associated with collecting, representing, structuring, updating, and effectively utilizing knowledge within AI systems [3,44,52]. This bottleneck prevents AI systems from efficiently acquiring and leveraging the necessary information to operate adaptively, intelligently, and reliably in complex, real-world, and dynamic environments. It is not a monolithic problem but rather a multi-dimensional constraint space impeding AI’s cognitive capabilities.

To systematically characterize the KAB, we propose a theoretical framework that delineates its manifestations and underlying causes across four primary dimensions:

- Resource Constraints: This dimension encompasses the tangible limitations on knowledge acquisition, primarily driven by the extensive manual effort and high economic costs [19,21,41]. It includes the labor-intensive processes of data labeling, annotation, expert elicitation, and the significant computational costs associated with training and maintaining complex AI models, especially Large Language Models (LLMs) [44,64]. The scarcity of high-quality labeled data further exacerbates this constraint, hindering model training and generalization [3,25].

- Cognitive Constraints: This dimension refers to the limitations in AI models’ inherent abilities to process, represent, or reason with knowledge due to architectural designs or the complexity of the knowledge itself. Key issues include architectural mismatch between textual and structured representations, preventing effective integration of knowledge graphs with LLMs [12,42]. The opacity of AI systems, particularly LLMs, leads to interpretability bottlenecks, making it challenging to understand how knowledge is used or to generate human-level explanations [32,50]. Furthermore, a significant human-AI knowledge gap emerges when AI systems develop reasoning principles and concepts not readily understandable or leveraged by human experts [37,54].

- Dynamic Constraints: This dimension highlights the challenges associated with maintaining and adapting knowledge in evolving environments. A critical problem, especially for LLMs, is their reliance on static, pre-trained knowledge, leading to

outdated information,hallucinations, andknowledge forgettingwhen confronted with new data [5,44]. Catastrophic forgetting, where previously acquired knowledge is lost upon learning new tasks, is a pervasive issue, often linked to the decay ofknowledge entropyduring pre-training, which reduces the model’s plasticity for new knowledge acquisition [69]. - Epistemic Constraints: This dimension focuses on issues related to the quality, certainty, and generalizability of knowledge. Knowledge acquisition is frequently bottlenecked by environments characterized by inherent uncertainty, partial observability, and difficulties in generalizing knowledge beyond specific training distributions [37]. Data quality issues, such as annotation inconsistencies or incompleteness in structured knowledge bases, also lead to

knowledge incompletenessanddata sparsity, impacting system reliability and performance [25,55].

These KAB dimensions are often rooted in labor-intensive processes for knowledge engineering and annotation, which severely limit scalability and continuous updates [3]. Within LLMs, internal dynamics characterized by “knowledge circuits” and “knowledge entropy decay” underpin their ability, or inability, to integrate and retain information efficiently [34,69]. Furthermore, the “acquired knowledge gap” (what models know) and the “knowledge utilization gap” (how much of that knowledge they can effectively use) in pre-trained language models highlight critical limitations in current evaluation methodologies [53].

The manifestations of the KAB vary significantly across specific AI domains. For LLMs, this includes issues like insufficient knowledge utilization and hallucinations due to outdated or conflicting information [44,53]. Knowledge Graphs grapple with the manual effort for construction and the challenges of data heterogeneity and incompleteness [3]. In Natural Language Processing (NLP), particularly clinical applications, data scarcity and the high cost of human annotation are prevalent [21]. In MLaaS security, the KAB is framed by adversarial query costs and intellectual property concerns related to model extraction [15]. Binary code analysis struggles with the invariance to compilation nuisances and poor generalization to out-of-domain architectures [24]. Robotics and Reinforcement Learning face data scarcity, the reality gap, and the curse_of_dimensionality [64].

Across all these domains, persistent cross-cutting challenges include architectural mismatch, scalability limitations, data scarcity, issues of reliability and interpretability, and the difficulty of achieving Out-of-Distribution (OOD) generalization. Addressing these multifaceted challenges requires moving beyond incremental improvements toward developing novel frameworks and algorithms that prioritize efficiency, scalability, robustness, and interpretability in knowledge acquisition and utilization. Future research must focus on developing standardized, quantifiable metrics for KAB severity to enable robust comparative analyses and to provide clearer targets for mitigation efforts, ultimately aiming to bridge the critical human-AI knowledge gap [12,30].

2.1 Definition and Manifestations

The Knowledge Acquisition Bottleneck (KAB) represents a pervasive challenge across diverse fields of artificial intelligence, broadly defined as the inherent difficulty, cost, and inefficiency associated with collecting, representing, structuring, updating, and effectively utilizing knowledge within AI systems. This encompasses the fundamental obstacles preventing AI from efficiently acquiring and leveraging the necessary information to operate adaptively, intelligently, and reliably in real-world, dynamic environments [3,44,52].

The manifestations of KAB can be categorized into several distinct types, reflecting the multifaceted nature of knowledge acquisition:

1. Manual Effort and Economic Costs: A dominant manifestation of the KAB stems from the extensive manual effort and high economic costs required for knowledge engineering. This includes the labor-intensive process of data labeling and annotation, which is often expensive, time-consuming, and prone to errors [3,6,10,14,16,18,19,21,25,31,41,51,57,72,75]. For instance, populating leaderboards for machine learning research requires “laborious and error-prone” human annotation of results [41], and creating high-quality sense-annotated corpora for Word Sense Disambiguation (WSD) is “laborious and expensive” [10]. Similarly, in the clinical NLP domain, manual chart review for extracting medical events is “prohibitively expensive” [21]. The elicitation of well-specified prior distributions from human experts for predictive models is also a “challenging, costly, or infeasible” endeavor, especially in low-resource settings [19]. For Large Language Models (LLMs), prompt engineering demands “heavy dependence on manual expertise” [43,44]. The high costs extend to physical robot maintenance, which limits the number of training episodes and necessitates constant human oversight during learning, contributing to resource constraints [64]. In adversarial model extraction, the cost of model extraction queries, billed pro rata, imposes strict budget constraints, compelling adversaries to minimize API calls [15,45].

2. Data Scarcity and Quality Issues:

A prevalent bottleneck is the scarcity of sufficiently large and high-quality labeled data, hindering model training and generalization. This data scarcity is noted across various domains: for large-scale labeled data requirements in supervised fine-tuning (SFT) of LLMs [44], for new resources in Knowledge Graph (KG) construction [74], for full-text scientific articles and novel facets in scholarly information extraction [51], and for low-frequency knowledge in LLMs due to insufficient representations [34]. Robotics faces data scarcity due to physical constraints, wear-and-tear, and high maintenance costs [64]. Clinical NLP for rare events like hypoglycemia also struggles with data scarcity for training robust models [21].

Beyond mere quantity, data quality is critical. Issues like manual annotation inconsistencies or errors lead to lower quality training data and significant performance drops; for instance, models trained on single-annotated data for Named Entity Recognition (NER) show a 9.8% F-score drop compared to double-annotated data [25]. The vast majority of experimental data in scientific domains remains “locked in semi-structured formats” like tables and figures, creating a computational challenge for knowledge extraction [65]. Even large KBs can be “error-prone and have lot many facts that are still missing” [9], highlighting knowledge incompleteness and data sparsity in structured forms.

3. Modeling Complexity and Architectural Mismatch:

This type of KAB arises when current AI models struggle to process, represent, or reason with knowledge due to limitations in their design or the inherent complexity of the knowledge itself. The “modeling bottleneck” is evident in classical planning, where autonomously acquiring classical planning models from subsymbolic inputs faces the “Symbol Stability Problem” (SSP). This issue, caused by systemic and statistical uncertainties, results in unstable latent representations that degrade search performance, disconnect the search space, and complicate hyperparameter tuning [28]. Similarly, in Knowledge Tracing (KT), traditional methods use “opaque latent embeddings” lacking interpretability, while LLM-based explanations can “hallucinate,” compromising reliability and preventing actionable insights for educators [50].

LLMs, despite their capacity, frequently struggle with structured relational knowledge, leading to suboptimal results on knowledge-intensive tasks [12,42]. They often fail to “fully exploit relational patterns” for complex or multi-hop reasoning, contributing to factual errors and hallucinations [30,71]. This architectural mismatch is also evident in integrating structured KGs with free-form text [1] and in LLMs’ inability to adequately process granular external knowledge, often treating it “as functionally equivalent to random noise” [7]. The difficulty in handling highly flexible domain-specific terminology (e.g., in clinical notes or operations and maintenance records) further exemplifies this modeling challenge, as manually specifying rules becomes impossible [21,48]. Agentic systems also face architectural issues like “Task-Specific Experience Isolation” and “Single-Level Retrieval Granularity,” preventing knowledge transfer and efficient adaptation across tasks [33].

4. Dynamic Knowledge Maintenance and Forgetting:

A critical challenge, particularly for LLMs, is their reliance on “static, pre-trained knowledge” that becomes “outdated or insufficient when confronting evolving real-world challenges” [5,20,30,44,68]. This leads to hallucinations and unreliable performance [5]. LLMs struggle to “dynamically update and extract newly acquired factual information” even after continued pre-training, exhibiting “impaired question-answering (QA) capability” and “constrained knowledge retrieval” [68].

Knowledge forgetting is a significant sub-category. Catastrophic forgetting occurs when models lose previously acquired knowledge upon learning new tasks, a pervasive issue in continual learning [5,26,44,67]. This is quantitatively measured by knowledge entropy decay during pre-training, where LLMs show a consistent decline in the range of memory sources utilized, leading to reduced ability to acquire new knowledge and increased forgetting rates in continual learning scenarios [69]. For instance, a strong Pearson correlation of 0.94 between knowledge entropy and acquisition, and -0.96 with forgetting, highlights this phenomenon [69]. This issue is exacerbated by LLMs’ lack of an inherent mechanism to memorize newly retrieved information, necessitating repeated retrieval for future use [27]. In robotics, dynamic robot properties like wear and temperature changes make learned behaviors quickly outdated, demanding dynamic knowledge maintenance [64].

5. Interpretability and Representation Bottlenecks:

This KAB arises from the opacity of AI systems’ internal workings, preventing understanding of how knowledge is used. In knowledge tracing, interpretability is crucial as predictive accuracy alone can be misleading [50]. LLMs, despite their knowledge, struggle with self-awareness and human-level explanations, lacking “semantically interpretable knowledge resources” crucial for action justification [58]. This lack of introspectability makes it difficult to ensure reliability, detect misuse, and debug flawed reasoning [32,36]. In scientific domains, highly accurate ML predictions from satellite imagery often fail to yield “scientific insight” or new knowledge because the models cannot explain why predictions are made, hindering understanding of causal relationships for social scientists and policymakers [39].

6. Human-AI Knowledge Gap:

A distinct KAB emerges when AI systems, particularly those achieving superhuman performance through self-play (e.g., AlphaZero), develop reasoning principles and concepts that are not derived from or constrained by human knowledge. This “human-AI knowledge gap” ($M-H$ set) represents machine-specific knowledge ($M$) not yet part of human understanding ($H$) [54]. Such a gap is evident when AI decisions are initially incomprehensible to human experts but are later validated as superior, limiting human experts’ ability to leverage AI insights [54]. This gap is also observed when LLMs struggle with polysemous terms and domain-specific terminology, requiring ontological grounding to prevent hallucinations and ensure correct interpretation [70]. Furthermore, the lack of universal measurement standards across diverse feature distributions can lead to AI misidentifying objects, as it hasn’t learned the “differences in feature distributions between knowledge systems” [37].

7. Scalability and Computational Limitations:

The sheer volume of data and the computational demands of modern AI systems pose significant KABs. The “explosion in the number of machine learning publications” makes manual tracking of state-of-the-art “unsustainable over time” [11,41]. In KG construction, manual approaches are not scalable for continuous updates from large, diverse data sources, leading to inefficient learning paradigms and high re-computation costs [3,49]. LLMs face significant computational and memory requirements, along with massive data demands for training, which complicate deployment on edge systems [8]. High computational costs for Reinforcement Learning (RL) and lengthy training processes for SFT are also noted [44]. In robotics, the “curse of dimensionality” poses scalability issues for high-DOF robots, and real-time execution limits learning speed [64].

8. Uncertainty and Generalization Issues:

Knowledge acquisition is bottlenecked by environments characterized by uncertainty, partial observability, and difficulties in generalizing knowledge. In open-world settings, significant differences in data distribution and feature representation lead to poor generalization for models trained on one domain and applied to another [37]. This is further complicated by “insufficient confidence in new knowledge” and challenges in incremental recognition with infinite label spaces [37]. RL systems face KABs from “sparse rewards, partial observability, and vast state spaces,” making efficient exploration difficult and resulting in a “vanishing probability of reaching the goal randomly” [35,56]. Knowledge is also bottlenecked by its “invariance” to skill, data distribution (Out-of-Distribution generalization), and data syntax, where models fail to adapt knowledge across varying contexts [47]. Within KG construction, uncertainty management is a major KAB, as KGs suffer from “knowledge deltas” arising from invalidity, vagueness, fuzziness, timeliness, ambiguity, and incompleteness, which impact KG quality and downstream applications [55].

Acquired Knowledge Gap vs. Utilized Knowledge Gap in PLMs:

| Aspect | Acquired Knowledge Gap | Utilized Knowledge Gap | Implication for KAB |

|---|---|---|---|

| Definition | Missing facts in the model’s parametric knowledge. | Inability to effectively apply already acquired knowledge in downstream tasks. | Model knows but cannot use its knowledge effectively. |

| Quantifies | What the model knows. | How much of that knowledge can be effectively used. | Reveals a disconnect between presence and applicability. |

| Measurement Example | Top-1 accuracy on diagnostic fact-checking datasets. | Performance drop on tasks designed from the model’s known facts. | Traditional metrics may overestimate true capability. |

| Manifestation | Model lacks specific factual information. | Knowledge is present but deployment is ineffective, inflexible, or sub-optimal. | Even with knowledge, system is unreliable or inefficient. |

| Observed in PLMs | Larger models acquire more facts. | Persists even with larger models, suggesting internal limitations (e.g., inductive biases). | Scaling alone is insufficient to close the utilization gap. |

| Contribution to Hallucination | Model invents missing facts due to lack of knowledge. | Model fails to apply correct knowledge, leading to incorrect generation despite internal presence. | Both contribute to unreliability and factual errors. |

Pre-trained Language Models (PLMs) exhibit a crucial distinction in their KAB: the “acquired knowledge gap” and the “knowledge utilization gap” [53]. The acquired knowledge gap refers to the missing facts in the model’s parametric knowledge, quantifying what the model knows (e.g., top-1 accuracy on diagnostic fact-checking datasets) [53]. In contrast, the knowledge utilization gap signifies how much of the already acquired parametric knowledge can be effectively utilized in downstream tasks. This gap manifests as a significant performance drop on tasks specifically designed from the model’s known facts, suggesting insufficient knowledge utilization even when knowledge is present internally [53]. For instance, a model like RoBERTa might acquire more facts than GPT-Neo but achieve similar downstream performance due to a larger utilization gap, implying an inability to apply stored knowledge effectively to relevant tasks [53]. This distinction is robust across different knowledge base sizes and negative sampling strategies, indicating inductive biases within the model or fine-tuning process as potential culprits [53]. This problem is further compounded by “knowledge conflicts,” where LLMs might favor internally memorized (potentially outdated) knowledge over explicitly provided, correct external context, creating a “knowledge acquisition-utilization gap” where external information is available but not effectively applied [20,36,44].

Knowledge Forgetting and LLMs’ Dynamic Knowledge Challenges:

Knowledge forgetting in AI systems, particularly LLMs, is defined as the degradation or loss of previously learned information upon continuous learning or exposure to new data. Beyond catastrophic forgetting, recent studies have introduced quantitative measures. One such measure is knowledge entropy, a metric derived from the feed-forward networks (FFNs) of LLMs, which quantifies the range of memory sources a model utilizes. A decline in knowledge entropy during pre-training, consistently observed in models like OLMo and Pythia, suggests that models increasingly rely on a narrower set of active memory vectors, leading to reduced ability to acquire new knowledge and increased rates of forgetting in continual learning scenarios [69].

These internal dynamics profoundly impact LLMs’ ability to continuously update and integrate dynamic world knowledge. LLMs are characterized by their static nature post-training, making rapid adaptation to novel tasks in dynamic environments challenging [44,68]. They struggle to extract newly acquired factual information, even after continuous pre-training on new corpora, leading to impaired QA performance and constrained knowledge retrieval [68]. This manifests as initial closed-book evaluations showing “extremely low performance” (e.g., 2% Exact Match) for new knowledge, indicating LLMs possess virtually none of the target domain’s new facts [68]. The “abnormal learning behavior” of LLMs, where fine-tuning for QA tasks can lead to forgetting original biographical text, underscores the fragility of parametric knowledge and the complex interplay between different knowledge representations [73]. The challenge lies in how LLMs internalize new information and structurally integrate it, with acquisition efficiency being influenced by the relevance and frequency of new knowledge to pre-existing knowledge [34].

Communalities, Nuances, and Critical Assessment:

Across these diverse manifestations, a common thread is the significant economic and practical burden they impose. This includes substantial labor costs, prolonged development times, diminished system reliability, and underutilized AI capabilities. The sheer scale of data in modern systems leads to scalability challenges, whether in tracking scientific literature (e.g., over 33,000 ML papers in 2019, growing 50% yearly [41]) or managing petabytes of operational data.

However, each domain presents specific nuances:

- LLMs: The KAB is deeply intertwined with their parametric knowledge storage, statistical learning capabilities, and the inherent trade-off between broad generalization and deep domain-specific expertise. Their susceptibility to

hallucination,knowledge conflicts,limited context windows, andknowledge forgettingare architectural shortcomings [12,30,36]. The critique for LLMs often centers on the difficulty in quantifying the “cognitive gap” or the exact severity of knowledge fragility [12,30]. - Knowledge Graphs: The core KABs are related to

incompleteness,data heterogeneityfrom diverse sources,error propagationin construction pipelines, and the constant need for dynamic maintenance and quality control from potentially noisy facts [3,23,55]. The theoretical foundation often involves symbolic representation and formal logic, which contrasts with the statistical nature of LLMs. - Robotics: KABs are significantly influenced by physical constraints, high costs of real-world interaction, the

reality gapbetween simulation and reality, and the need to cope with partial observability and uncertainty in unstructured environments [64]. This often highlights the challenges of grounding abstract knowledge in embodied agents. - Model Extraction: The KAB is defined by an adversarial context, where the “adversary lacks access to the secret training dataset” and faces severe query budget constraints [15,45]. This necessitates active learning strategies to optimally select samples for querying.

- Knowledge Tracing: The primary bottleneck is the

interpretabilityof student knowledge states, moving beyond opaque latent embeddings to actionable insights for human educators [50].

Critically, many analyses, while effectively identifying KABs, often lack a broad theoretical exploration beyond their specific domain, or quantitative measures of the bottleneck’s severity in terms of universal costs, time, or performance impacts before applying their proposed solutions [5,6,10,11,12,14,16,19,21,23,25,28,29,30,37,39,40,41,42,44,48,51,57,59,62,64,65,67,68,71,74]. Future research should focus on developing standardized, quantifiable metrics for KAB severity to enable more robust comparative analyses across AI paradigms and to provide clearer targets for mitigation efforts. The challenge of how to effectively bridge the human-AI knowledge gap, instill true logical reasoning in LLMs, and efficiently integrate dynamic, multi-modal knowledge remains an open and critical area for investigation.

2.2 Underlying Causes and Limitations of Traditional Approaches

The Knowledge Acquisition Bottleneck (KAB) poses a persistent challenge across diverse domains of artificial intelligence, rooted in fundamental limitations of traditional methodologies and emerging issues within modern paradigms like Large Language Models (LLMs). These limitations stem from inherent assumptions, architectural choices, and domain-specific adaptations that collectively impede the efficient, scalable, and reliable acquisition, representation, and utilization of knowledge.

A primary and pervasive root cause of the KAB lies in the labor-intensive processes that necessitate extensive human domain expertise, impose significant cognitive load, and make formalizing complex knowledge exceedingly difficult. This bottleneck manifests acutely in several areas:

- Manual Knowledge Engineering and Annotation: Constructing Knowledge Graphs (KGs) traditionally demands substantial manual intervention for identifying relevant sources, defining ontologies, mapping data, and validating quality [3]. This manual effort is costly, time-consuming, and resource-intensive, requiring specialized domain experts [3,4,6,10,12,14,17,19,25,28,29,36,55,63,72]. For instance, rule-based systems in information extraction (IE) are difficult to scale due to the impossibility of manually specifying rules for all patterns in flexible language, such as clinical notes [9,21]. High-quality prompt engineering for LLMs similarly introduces a time-consuming and laborious manual effort, akin to traditional knowledge engineering [12,43].

- Scalability Issues: The inherent labor-intensiveness of manual annotation and rule definition severely limits scalability, making these approaches impractical for large volumes of data or rapidly evolving knowledge domains [3,8,10,11,12,21,23,31,32,38,41,51,55,59,63,65,66,67,75]. This is particularly problematic for ontology evolution, which struggles to keep pace with the growth of unstructured information [17].

Within LLMs, two theoretical perspectives shed light on the internal dynamics governing knowledge integration and loss. The concept of “knowledge circuits” proposes that knowledge acquisition within LLMs is driven by “topological changes” and the cooperative interplay between multiple components like attention heads and MLPs, rather than isolated knowledge blocks [34]. This theoretical foundation suggests a complex, distributed representation of knowledge. Conversely, the empirical observation of “knowledge entropy decay” during language model pretraining reveals that as training progresses, the distribution of memory coefficients in Feed-Forward Network (FFN) layers becomes sparser, leading to reliance on a limited set of memory vectors [69]. This reduced plasticity makes efficient new knowledge acquisition difficult and increases the risk of catastrophic forgetting, where new information overwrites existing, frequently used knowledge [5,20,26,36,67]. A consolidated view suggests that while LLMs form intricate “knowledge circuits” through topological changes to encode information, the continuous decay of “knowledge entropy” limits the dynamic range and plasticity of these circuits. This makes it challenging to form new, stable circuits for novel knowledge or to efficiently update existing ones without destabilizing previously learned information, thus contributing to the KAB in LLMs.

Prior methodological limitations in evaluating knowledge utilization have often obscured the true nature and persistence of the KAB in pre-trained language models (PLMs). Confounding factors, such as “shortcuts or insufficient signal” from “arbitrary crowd-sourced tasks” and the use of synthetic datasets that are not comprehensive or dynamic enough, have led to misleading assessments of knowledge acquisition and utilization [20,43,53,60,73]. This results in a “knowledge acquisition-utilization gap,” where models possess knowledge internally but fail to effectively leverage it for downstream tasks or novel scenarios [7,27,42,49,68].

Theoretical explanations for the KAB vary significantly across different AI paradigms:

- Reinforcement Learning (RL): KAB in RL is attributed to challenges such as sparse rewards, vast state spaces, partial observability, and the inherent difficulty of exploration and policy generalization [56]. Traditional exploration methods often lack the ability to leverage experience from previous tasks, preventing efficient knowledge transfer and adaptation to new environments [56].

- Educational AI: Knowledge representation in educational AI faces interpretability and reliability issues. Traditional Knowledge Tracing (KT) methods represent student knowledge as “opaque, high-dimensional latent vectors,” hindering actionable insights for educators [50]. Newer LLM-based approaches, while promising, risk “hallucination” and lack accuracy guarantees, compromising their reliability for educational tools [2,50]. Traditional methods also struggle with non-personalization, predefined concepts, static knowledge assumptions, and difficulty modeling non-graded learning materials, leading to data scarcity for explicit knowledge gain signals [22].

- Deep Learning (DL): A major limitation is its pervasive reliance on large volumes of meticulously annotated examples [16]. This “extreme data-dependence” leads to scalability issues for manual annotation, making data acquisition both time-consuming and expensive [8,25,57]. Weak supervision methods often produce incorrect or inconsistent labels, and joint inference involves significant modeling complexity and computational intractability [16].

- Full Text Processing: In scholarly information extraction, traditional approaches often relied on partial text (e.g., abstracts), missing crucial details only present in full texts [51]. Existing neural models also faced data scarcity and architectural mismatch for comprehensive full-text processing, struggling with document-level context and long-range relations [11,51].

- Competency Question Generation: The KAB here is characterized by the labor-intensive manual formalization of competency questions and their validation, coupled with a lack of standardized evaluation benchmarks and reusable datasets, constituting a significant methodological flaw [14].

KAB Root Causes and Limitations by AI Paradigm

| AI Domain / Area | Primary Root Causes of KAB | Limitations of Traditional Approaches (Pre-LLM) | Emergent LLM Limitations (if applicable) |

|---|---|---|---|

| General Knowledge Engineering | Labor-intensive manual processes, High human expertise dependency, Cognitive load for formalization | Costly, Time-consuming, Resource-intensive, Not scalable for evolving knowledge | Manual prompt engineering, Sensitivity to phrasing |

| Knowledge Graphs (KGs) | Manual schema definition/population, Data heterogeneity, Error propagation in pipelines | Scalability issues for continuous updates, Lack of comprehensive entity fusion/quality assurance | Hallucination, Reliability issues for generative KGC |

| Reinforcement Learning (RL) | Sparse rewards, Vast state spaces, Partial observability, Difficulty of exploration/policy generalization | Inability to leverage experience from previous tasks (transfer learning) | - |

| Educational AI | Opaque knowledge representation, Difficulty modeling non-graded learning, Student selection bias | Limited interpretability of student knowledge states (latent vectors), Static knowledge assumptions | Hallucination in LLM explanations, Lack of accuracy guarantees |

| Deep Learning (DL) | Extreme data dependence, Need for meticulous annotation | Scalability issues for manual annotation, Noisy/inconsistent labels from weak supervision | Computational cost, Data dependency on massive datasets |

| Full Text Processing (e.g., Scientific) | Manual tracking of state-of-the-art, Extracting complex technical facets | Reliance on partial text (e.g., abstracts), Architectural mismatch for document-level context | - |

| Competency Question Generation | Labor-intensive manual formalization/validation, Lack of standardized evaluation/reusable datasets | Methodological flaws (e.g., lack of benchmarks) | - |

| Traditional Robotics | Accurate modeling of complex real-world dynamics, Hand-crafted solutions are brittle | Knowledge/Engineering/Tractability/Precision bottlenecks, Intractability of modeling soft bodies/fluids | - |

| AlphaZero (Game AI) | - | Human biases, Reliance on heuristics, Limited computational capacity (in humans) | Human-AI knowledge gap ($M-H$ set) - machine knowledge incomprehensible to humans |

| Binary Code Analysis | Compilation artifacts (function inlining, injected code), Sensitivity to compiler variations | Poor OOD generalization, Over-reliance on task-specific data, Learning “nuisance information” | Architectural mismatch for semantic understanding |

| Pre-trained Language Models (PLMs) | Knowledge circuits/entropy decay, Acquired/Utilized knowledge gap | Confounding factors in evaluation (shortcuts, insufficient signal), Synthetic datasets not dynamic enough | Catastrophic forgetting, Ineffective knowledge utilization, Hallucational reasoning, Lack of transparent reasoning |

Critically comparing root causes across different AI domains reveals distinct yet interconnected limitations:

- Traditional Robotics vs. ML: Traditional robotics, with its reliance on explicit models, encounters severe “knowledge,” “engineering,” “tractability,” and “precision” bottlenecks [64]. Accurately modeling complex real-world dynamics (e.g., soft bodies, fluids) is often intractable, and hand-crafted solutions become brittle in changing environments [64]. This contrasts sharply with machine learning’s ability to directly learn from experience and adapt to unmodeled dynamics, though sometimes at the cost of interpretability or requiring vast amounts of data [64].

- AlphaZero’s Self-Taught Capability vs. Human Bias: AlphaZero’s ability to learn strategies solely through self-play, unconstrained by human supervision, led to the discovery of “unconventional” and “unorthodox” knowledge ($M-H$ set) that human experts might overlook due to their inherent biases, reliance on heuristics, and limited computational capacity [54]. This highlights a fundamental “human-AI knowledge gap,” where human analysis can inadvertently limit the discovery of truly novel AI concepts by attempting to “shoehorn” machine knowledge into existing human frameworks [54].

- Classical Model Extraction vs. Assembly Code Analysis: Classical model extraction often necessitates a “domain knowledge requirement,” demanding access to subsets of secret datasets or hand-crafted examples, posing a significant “human expertise dependency” for an adversary [15]. In contrast, traditional learning-based approaches for assembly code analysis suffer from a lack of “human expert knowledge” integration and an over-reliance on task-specific data. This leads to severe Out-of-Distribution (OOD) generalization failures and sensitivity to compiler variations, making models inapplicable to unseen architectures and toolchains [24]. Existing models inadvertently learn “nuisance information” from training data, further hindering their transferability [24].

Across these varied applications, several cross-cutting limitations emerge. Architectural mismatch is a recurring theme, hindering the seamless integration of structured and unstructured data, preventing models from leveraging crucial structural information (e.g., in KGs), or leading to suboptimal knowledge transfer mechanisms [1,3,4,8,12,13,16,18,21,26,27,28,31,32,33,37,42,46,58,62,63,67,70,74]. This is evident in the difficulty of blending structured data with free-form text or the challenges of adapting RAG pipelines, primarily designed for unstructured text, to structured graph data [1,63]. The architectural constraints of LLMs also mean they lack substantial pre-training on graph-structured data, impairing their inherent performance on graph-related tasks [63].

Scalability and computational costs remain significant hurdles, from the batch-oriented processing of traditional KG construction leading to redundant computation [3], to the prohibitive costs of retraining massive LLMs or performing extensive human annotation [20,27,44]. Even modern methods like LLM-based interpretable models face scalability issues due to numerous costly API queries [32]. Data scarcity and dependency are equally critical, as many advanced models still require vast amounts of accurately labeled data, which is difficult and expensive to obtain, especially in domain-specific or low-resource settings [16,21,25,48,57,70].

Furthermore, the phenomena of hallucination, lack of reliability, and poor interpretability are significant limitations, particularly concerning LLMs. LLMs frequently generate texts that lack factual accuracy or contextual relevance [2,5,12,17,29,30,36,55,59,66,70]. This arises from issues such as improper learning data distribution, internal memory conflicts, and a lack of mechanisms for “mover heads” within LLMs [30]. The absence of provenance or reliability information further compromises the utility of LLM-extracted knowledge, particularly in sensitive domains [55]. Finally, OOD generalization remains a critical challenge, as models tend to overfit to specific domains, learn spurious correlations, and struggle to transfer knowledge effectively to new, unseen environments [24,27,37,42,48,70,72].

These limitations underscore the pressing need for novel frameworks and algorithms that can overcome the KAB by balancing efficiency, scalability, robustness, and reliability across the diverse landscape of AI applications. Addressing these underlying causes will require moving beyond mere incremental improvements to rethinking fundamental architectural designs, knowledge representation schemes, and evaluation methodologies.

2.3 Manifestations in Specific Domains

The Knowledge Acquisition Bottleneck (KAB) is a pervasive challenge across diverse fields of artificial intelligence, manifesting uniquely in each domain due to distinct underlying assumptions, theoretical foundations, architectural choices, and domain-specific adaptations. A comparative analysis reveals that while some challenges like data scarcity and the need for human expertise are widespread, their precise nature, severity, and optimal mitigation strategies vary significantly. This section provides a high-level overview of KAB manifestations across key AI domains, setting the stage for more detailed discussions in subsequent subsections.

In the realm of Large Language Models (LLMs), the KAB is multifaceted, primarily stemming from their reliance on massive, static pre-training corpora. Key manifestations include insufficient knowledge utilization and a persistent knowledge acquisition-utilization gap where LLMs possess encyclopedic facts but struggle to reliably apply or retrieve them in downstream tasks or novel contexts [27,53,61,68,73]. The static nature of their training data leads to outdated knowledge, hallucinations, and knowledge forgetting during continual learning or updates, where new information overwrites prior memorization [5,20,30,34,69]. Furthermore, LLMs exhibit a lack of deep domain-specific knowledge [2,4,44] and struggle with the integration and utilization of structured knowledge like that found in Knowledge Graphs (KGs), often yielding sub-optimal results and hallucinations due to architectural mismatch between textual and structured representations [1,12,17,36,42,63]. Interpretability and controllable generation remain significant challenges, particularly given the opacity of their internal decision-making processes [32,59].

In Knowledge Graphs (KGs) and Knowledge Engineering, the KAB is primarily rooted in the manual effort and human expertise required for their construction, curation, and continuous management [3,9,12,14,31,55,66]. Challenges include data heterogeneity from diverse sources, scalability issues in processing vast and dynamic information, incompleteness of real-world knowledge, and stringent quality control measures to maintain accuracy and consistency [3,11,23,31,38,40,55]. This contrasts sharply with LLMs, where the challenge is less about creating structured knowledge from scratch and more about effectively utilizing existing knowledge.

For Natural Language Processing (NLP), particularly in specialized domains like Clinical NLP, data scarcity and the high cost of human annotation are central KAB manifestations [9,16,18,19,21,25,57,72]. This is exacerbated by specialized vocabularies, inconsistent structures, rare entities, and small record sizes in fields like aviation and maintenance, leading to domain-specific knowledge gaps and poor performance of generic NLP tools [6,48,70]. In scholarly information extraction, the KAB is driven by the exponential growth of scientific literature, leading to information overload and the manual effort required to track state-of-the-art and extract complex technical facets [41,51]. Word Sense Disambiguation (WSD) similarly struggles with data scarcity for sense-annotated corpora and the noise introduced by automatically generated resources [10].

In MLaaS Security and Binary Code Analysis, the KAB is characterized by adversarial constraints and the inherent obfuscation of information. For Adversarial Model Extraction (AME) in MLaaS, the bottleneck is defined by the adversary’s lack of secret training data and the need to minimize costly queries to the proprietary service, highlighting a knowledge challenge centered on query costs and intellectual property concerns [15,45]. This presents a different manifestation than the manual model specification in classical planning. In Binary Code Analysis, the KAB stems from the profound difficulty in achieving generalizable semantic understanding due to compilation artifacts. Models struggle with data scarcity for unseen compiler variants, function inlining, and compiler-specific injected code, leading to architectural mismatch and poor generalization to out-of-domain architectures and libraries [24].

Within Robotics and Reinforcement Learning (RL), the KAB manifests as a range of challenges, including data scarcity in real-world environments, the reality gap between simulation and physical systems, and the curse of dimensionality in vast state-action spaces [35,56,64]. Other issues include uncertainty in dynamic environments, modeling complexity for deformable objects or dynamic manipulation, and safety risks associated with learning in physical systems. Interpretability of learned skills also poses a challenge [35]. This “reality gap” in robotics contrasts with the “M-H gap” in game AI, where the challenge is understanding and transferring machine-unique concepts rather than bridging physical simulation differences [54,64].

For Classical Planning and Game AI, the KAB focuses on symbolic representation and human-AI knowledge alignment. In classical planning, the KAB is primarily the manual symbolic model specification and the symbol grounding problem from raw, subsymbolic data, exacerbated by the lack of labeled action data [28,29]. This differs significantly from the difficulty in understanding internal knowledge states in knowledge tracing, which deals with student learning models [50]. In Game AI, especially with agents like AlphaZero, the KAB appears as machine-unique concepts and a human-AI discrepancy in how knowledge is interpreted and applied, making super-human strategies difficult for humans to extract and learn from [54].

In Educational Technologies and Knowledge Tracing (KT), the KAB centers on interpretability and the comprehensive modeling of student learning. Traditional KT models often rely on opaque latent embeddings that provide limited interpretability for teachers and learners, failing to offer actionable insights into knowledge gaps or misconceptions [50]. Furthermore, these models often assume quasi-static knowledge states and struggle to integrate knowledge acquired from multiple, heterogeneous learning resource types, leading to an incomplete understanding of student proficiency [22].

Beyond these major categories, KABs manifest in various other specialized domains. In Computer Vision, beyond general object recognition, manifestations include the inability to incorporate higher-level conceptual information (e.g., functionality, intentionality) and commonsense knowledge for tasks like event and scene recognition [72]. The poverty and welfare domain demonstrates a KAB not in data acquisition, but in the failure of ML models to generate scientific insights and causal understanding from satellite imagery, limiting actionable knowledge for policymakers [39]. Materials science faces challenges with data heterogeneity in semi-structured formats within scientific tables, hindering large-scale analysis of composition-property relationships [65]. Cross-domain general AI and cognitive systems face a KAB in their inability to adapt and transfer knowledge effectively across different tasks, data distributions, and syntaxes, often falling prey to spurious correlations and struggling with non-Euclidean data types [26,47].

Across these diverse manifestations, several common underlying challenges emerge: the persistent need for robust and efficient mechanisms to acquire, integrate, and dynamically update knowledge; the struggle for models to generalize beyond their training distribution or specific task formulations; the imperative for greater interpretability and explainability, particularly in sensitive domains; and the ongoing challenge of effectively bridging symbolic and sub-symbolic knowledge representations. Addressing these requires a deeper understanding of the inherent complexities within each domain, the development of architectures that can disentangle salient information from noise, and innovative frameworks for managing knowledge throughout its lifecycle.

2.3.1 Adversarial Model Extraction and MLaaS Security

Adversarial Model Extraction (AME) poses a significant challenge within the realm of Machine Learning as a Service (MLaaS) security, representing a critical knowledge acquisition bottleneck (KAB) for both adversaries and service providers. At its core, AME involves an attacker attempting to replicate a proprietary MLaaS model by strategically querying the service, thereby reconstructing a high-fidelity local replica [45]. This KAB is characterized by distinct constraints and objectives that differentiate it from other knowledge acquisition challenges, such as data scarcity in knowledge graphs (KGs) or high annotation costs in clinical Natural Language Processing (NLP) [15].

Adversarial Model Extraction KAB: Adversary vs. Provider Perspectives

| Aspect | Adversary’s KAB Challenges & Objectives | Provider’s KAB Challenges & Objectives |

|---|---|---|

| Core Goal | Replicate a proprietary MLaaS model (functional knowledge). | Protect proprietary model’s confidentiality & intellectual property (IP). |